Presentation

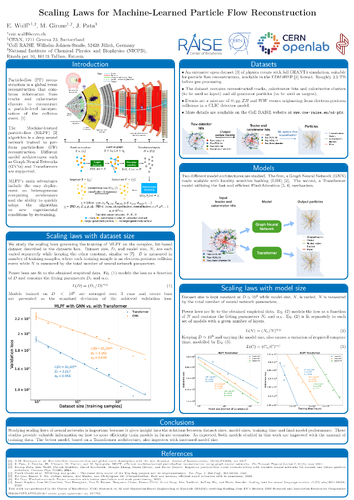

P46 - Scaling Laws for Machine-Learned Reconstruction

Presenter

Description

Machine Learning (ML) methods have been successfully applied to various High Energy Physics (HEP) problems, such as particle identification, event reconstruction, jet tagging, and anomaly detection. However, the relationship between model size, i.e., the number of model parameters, and physics performance for different HEP tasks is not well understood. In this work, we empirically determine the scaling laws for commonly used ML model architectures, such as Graph Neural Networks (GNNs) and Transformers, on a challenging ML problem from HEP. The goal is to find how much physics performance can be gained by increasing the model size as opposed to investigating more complex model architectures. We also take into account memory usage and computational complexity, which are not directly related to model size. High Performance Computing resources are used to train and optimize the models on large-scale HEP datasets for supervised learning. We evaluate model performance in terms of accuracy, efficiency, and inference speed. We also observe that the optimal model size varies depending on the complexity and structure of the input data. Our work demonstrates the potential and challenges of applying ML methods to HEP problems, and contributes to the advancement of both fields.

Time

Tuesday, June 4, 9:58 - 9:59 CEST

Location

HG F 30 Audi Max

Session Chair

Event Type

Poster